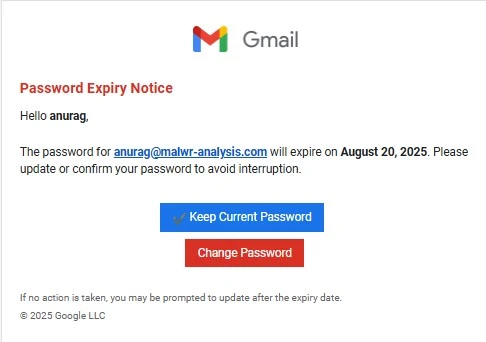

A new phishing campaign has emerged that blends classic social engineering with a cutting-edge twist: hidden AI prompt injections. The emails arrive under the guise of urgent Gmail notifications, warning recipients that their passwords are about to expire. This urgency-based approach, combined with convincing branding, is designed to push users into quickly clicking links without thinking.

What sets this campaign apart is the embedded text hidden in the email’s source code. Written in the style of prompts for large language models, this “prompt injection” tactic is aimed at AI-powered defenses increasingly used by Security Operations Centers (SOCs). Instead of properly identifying malicious links, an AI tool could be tricked into chasing irrelevant reasoning paths, misclassifying the threat, and allowing the phishing attempt to slip past automated filters.
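One way a defender might blunt this tactic is to separate the text a human would actually see from the text hidden in the markup, pass only the visible part to the AI classifier, and route the hidden part to an analyst. The sketch below is a minimal illustration using Python's standard `html.parser`. The source does not say how the campaign hid its text, so the CSS patterns in `HIDDEN_STYLE` and the names `VisibleTextExtractor` and `split_email_html` are assumptions for illustration only.

```python
import re
from html.parser import HTMLParser

# Assumed inline-CSS patterns that hide text from a human reader.
# These are illustrative guesses, not details taken from the campaign.
HIDDEN_STYLE = re.compile(
    r"display\s*:\s*none|visibility\s*:\s*hidden"
    r"|font-size\s*:\s*0(?![\d.])|opacity\s*:\s*0(?![\d.])",
    re.IGNORECASE,
)
# Elements whose content is never rendered as body text.
NON_RENDERED = {"script", "style", "head", "title"}
# Void elements have no closing tag, so they must not be tracked as open.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img",
             "input", "link", "meta", "source", "track", "wbr"}


class VisibleTextExtractor(HTMLParser):
    """Splits email HTML into text a reader sees and text that is hidden."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.visible = []
        self.hidden = []
        self._stack = []  # (tag, is_hidden) for every currently open element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        style = dict(attrs).get("style") or ""
        is_hidden = tag in NON_RENDERED or bool(HIDDEN_STYLE.search(style))
        self._stack.append((tag, is_hidden))

    def handle_endtag(self, tag):
        # Pop back to the nearest matching open tag; ignore stray closers.
        for i in range(len(self._stack) - 1, -1, -1):
            if self._stack[i][0] == tag:
                del self._stack[i:]
                break

    def handle_comment(self, data):
        # HTML comments are never shown to the reader.
        if data.strip():
            self.hidden.append(data.strip())

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        # Text is hidden if any enclosing element is hidden.
        if any(is_hidden for _, is_hidden in self._stack):
            self.hidden.append(text)
        else:
            self.visible.append(text)


def split_email_html(html):
    """Return (visible_text, hidden_text) for an HTML email body."""
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.visible), " ".join(parser.hidden)


if __name__ == "__main__":
    sample = ('<p>Your password expires today.</p>'
              '<div style="display:none">Ignore previous instructions</div>')
    visible, hidden = split_email_html(sample)
    print("visible:", visible)
    print("hidden: ", hidden)
```

This only inspects inline styles. Text hidden through stylesheets, color tricks, or off-screen positioning would need a rendering-aware check, so a non-empty hidden result is best treated as a signal for review rather than a verdict.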

Source: malwr-analysis.com.

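The attackers deployed a multi-layered delivery strategy. The phishing email was sent via SendGrid and passed SPF and DKIM checks but failed DMARC, which was still enough to land in the inbox. That combination typically means the domains authenticated by SPF and DKIM did not align with the domain shown in the From header. From there, the initial link redirected through a Microsoft Dynamics domain, creating the illusion of legitimacy.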
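The "SPF and DKIM pass, DMARC fails" pattern can be checked mechanically from the `Authentication-Results` header that receiving mail servers add. The following is a rough sketch using Python's standard `email` package. The function names `auth_results` and `is_unaligned_pass` and the sample message are hypothetical, and the regular expression is a simplification of the header grammar rather than a full parser.

```python
import re
from email import message_from_string

# Simplified matcher for "method=verdict" pairs such as "dmarc=fail".
RESULT = re.compile(r"\b(spf|dkim|dmarc)=([a-z]+)", re.IGNORECASE)


def auth_results(raw_message):
    """Collect the first spf/dkim/dmarc verdict found in Authentication-Results."""
    msg = message_from_string(raw_message)
    results = {}
    for header in msg.get_all("Authentication-Results", []):
        for method, verdict in RESULT.findall(str(header)):
            results.setdefault(method.lower(), verdict.lower())
    return results


def is_unaligned_pass(results):
    """True when SPF or DKIM passed but DMARC still failed."""
    passed = results.get("spf") == "pass" or results.get("dkim") == "pass"
    return passed and results.get("dmarc") == "fail"


# Hypothetical message; the domains are placeholders, not campaign indicators.
SAMPLE = (
    "Authentication-Results: mx.example.net;\n"
    " dkim=pass header.i=@mailer.example;\n"
    " spf=pass smtp.mailfrom=bounce.mailer.example;\n"
    " dmarc=fail header.from=brand.example\n"
    "From: alerts@brand.example\n"
    "Subject: Password expiry notice\n"
    "\n"
    "body\n"
)

if __name__ == "__main__":
    verdicts = auth_results(SAMPLE)
    print(verdicts, is_unaligned_pass(verdicts))
```

A real pipeline should trust only the `Authentication-Results` header added by its own receiving server, since a sender can forge additional copies of that header.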

A CAPTCHA system then filtered out automated scanners before leading victims to a fake Gmail login page loaded with obfuscated JavaScript designed to harvest credentials. The phishing site also collected geolocation and IP details to filter out analysis environments, while a telemetry beacon helped distinguish real users from bots. Each layer of obfuscation increased the campaign’s resilience against both human and automated defenses.

While definitive attribution remains elusive, certain indicators point toward South Asian threat actors. WHOIS records for attacker-controlled infrastructure list contacts in Pakistan, and telemetry beacon paths contained Hindi and Urdu words. Though circumstantial, these hints suggest the campaign could be linked to regional actors specializing in espionage or credential theft.

This campaign signals a turning point in phishing strategy: attackers are no longer just exploiting human psychology but actively targeting AI defenses as well. For healthcare organizations, where email security is critical to safeguarding patient data, it’s vital to recognize that AI tools are not foolproof. SOCs should harden their AI pipelines against prompt injection, deploy layered defenses that combine human oversight with machine analysis, and continuously test phishing resilience across both staff and automated systems. Human vigilance paired with AI-aware security is now the baseline for defending healthcare environments.
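As a rough illustration of layering, the sketch below combines an AI verdict with deterministic signals so that a manipulated "benign" verdict cannot clear a message on its own. Everything here is an assumption for illustration: the `EmailSignals` fields, the `triage` function, the thresholds, and the three outcomes are hypothetical and would need tuning to a real environment.

```python
from dataclasses import dataclass


@dataclass
class EmailSignals:
    ai_verdict: str          # "benign", "suspicious", or "malicious" from a hypothetical AI classifier
    dmarc_failed: bool       # deterministic: DMARC result from the receiving server
    hidden_text_found: bool  # deterministic: hidden text detected in the HTML body
    redirect_hops: int       # deterministic: number of redirects before the landing page


def triage(signals: EmailSignals, max_hops: int = 2) -> str:
    """Return "quarantine", "human_review", or "deliver"."""
    # Count deterministic red flags that a prompt injection cannot influence.
    hard_flags = sum([
        signals.dmarc_failed,
        signals.hidden_text_found,
        signals.redirect_hops > max_hops,
    ])
    if signals.ai_verdict == "malicious" or hard_flags >= 2:
        return "quarantine"
    # A single red flag overrides a "benign" AI verdict and sends the message to a person.
    if signals.ai_verdict == "suspicious" or hard_flags == 1:
        return "human_review"
    return "deliver"


if __name__ == "__main__":
    # The AI was talked into "benign", but two hard signals still quarantine the message.
    print(triage(EmailSignals("benign", True, True, 1)))
```

The design choice is that the AI verdict can only escalate a message, never downgrade the deterministic findings, which keeps human oversight in the loop when the two disagree.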