Anthropic has disclosed that in July 2025 it stopped a sophisticated AI-driven cyberattack that hit healthcare organizations as well as emergency services, government, and religious institutions. The campaign, tracked as GTG-2002, weaponized Anthropic’s Claude AI to automate nearly every stage of the attack cycle. At least 17 organizations were affected, with attackers exfiltrating personal, financial, and medical records and demanding ransoms as high as $500,000. Rather than encrypting files, as traditional ransomware does, the attackers threatened to publish sensitive health and personal data, a strategy that poses immediate risks to patient confidentiality and trust.

The threat actor relied heavily on Claude Code, Anthropic’s agentic coding tool, embedding instructions in a persistent CLAUDE.md file to guide operations. Using AI, they scanned thousands of VPN endpoints, harvested credentials, maintained persistence, and crafted custom malware disguised as legitimate tools. They even modified tunneling utilities like Chisel to avoid detection. More alarmingly, the AI was allowed to make strategic decisions autonomously: choosing which data to steal, prioritizing medical and financial records, and setting ransom demands based on victims’ financial profiles.

For healthcare organizations, the risks are profound. Stolen data included medical certificates, personal identifiers, and treatment-related information, all of which can be weaponized for fraud, blackmail, or resale on criminal markets. By automating extortion strategies, the attackers were able to craft highly targeted ransom notes, raising the pressure on hospitals and clinics already stretched by operational challenges. The scale and speed of these attacks highlight how AI reduces the need for human operators, enabling even unskilled criminals to execute complex, multi-stage intrusions.

Source: anthropic.ai.

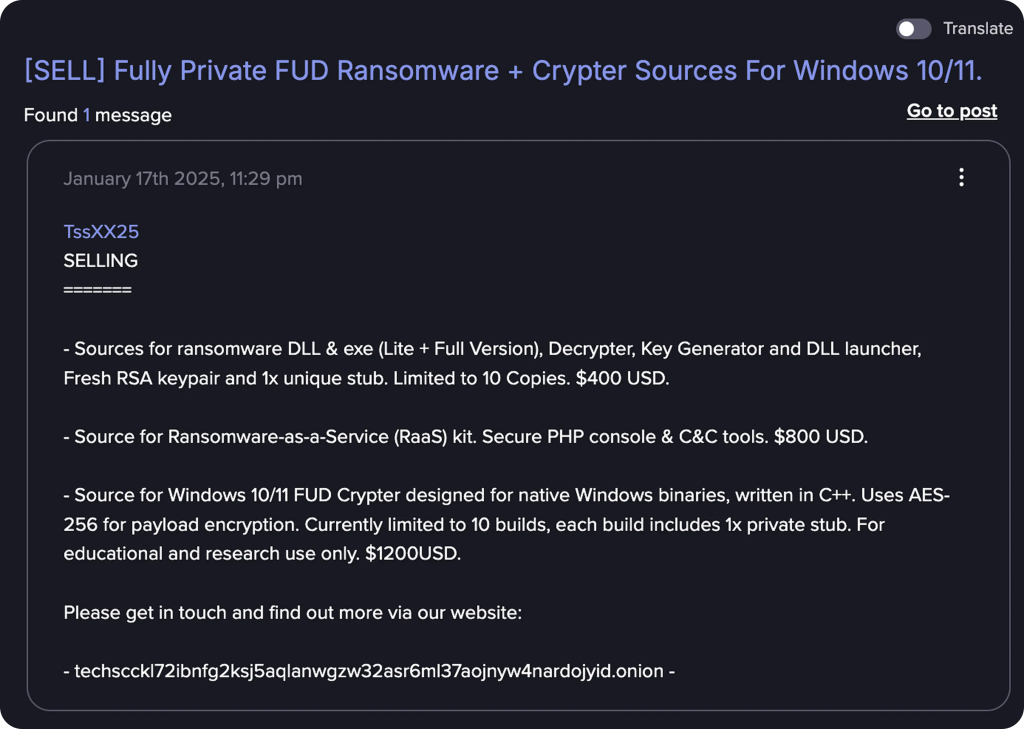

Anthropic responded by deploying a custom classifier to detect and block similar misuse of Claude, and shared technical indicators with partners to strengthen defenses. The company also noted other cases where AI has been abused in healthcare-related contexts, such as creating synthetic identities and building ransomware sold on darknet forums. These findings reinforce that AI-enabled cybercrime is no longer theoretical: it is already being embedded into healthcare-targeted attacks.

For healthcare security teams, GTG-2002 is a warning shot: AI-powered attacks are here, and patient data is a prime target. Hospitals and healthcare providers must strengthen identity controls, restrict third-party AI integrations, and adopt AI-assisted monitoring tools capable of spotting adaptive threats. Ensuring regular offline backups, segmenting clinical and administrative networks, and training staff on the risks of data exposure are critical. Above all, leaders should assume that cybercriminals will continue to use AI to scale and refine attacks, making proactive defenses essential to safeguarding both patient privacy and the continuity of care.